
MassGen v0.1.1: Custom Tools with GitHub Issue Market Analysis

MassGen is focused on case-driven development. MassGen v0.1.1 introduces a comprehensive custom tools system that lets users extend agent capabilities with domain-specific Python functions. This case study demonstrates Self-Evolution through Market Analysis: agents combine a custom tool with web search to analyze GitHub issues, research market trends, and autonomously drive feature prioritization.


(planning-phase)=

📋 PLANNING PHASE

(evaluation-design)=

📝 Evaluation Design

Prompt

The prompt tests whether MassGen agents can autonomously analyze their own codebase, GitHub issues, and market trends to drive product development:

Analyze the massgen dir and MassGen GitHub issues to understand what features users are requesting. Also research current trends in multi-agent AI systems and LLM orchestration. Based on the existing code, the open issues and market trends, write a prioritized recommendation report for the next release.

This prompt requires agents to:

- Access and analyze the MassGen codebase (via filesystem access)

- Fetch and analyze GitHub issues (via custom tool)

- Research market trends (via web search)

- Synthesize all sources into actionable recommendations

Baseline Config

Prior to v0.1.1, MassGen lacked a native custom tools system. Users had two main options for extending agents with domain-specific capabilities like GitHub API integration:

- MCP Servers: Use Model Context Protocol servers (external process with inter-process communication overhead)

- Built-in Tools Only: Rely solely on web search and code execution (limited functionality)

While MCP servers worked, they added operational complexity. There was no simple, native way to add Python functions as tools directly within the MassGen process.

Baseline Command

The pre-v0.1.1 equivalent would rely on web search only (no custom GitHub tool):

    uv run massgen \
      --config massgen/configs/basic/multi/gemini_gpt5nano_claude.yaml \
      "Research multi-agent AI trends and make recommendations for MassGen's next release."

Limitations:

- No direct GitHub API access

- No structured issue analysis

- Relies solely on web search scraping

- Cannot combine codebase analysis with issue data

(evaluation-analysis)=

🔧 Evaluation Analysis

Results & Failure Modes

Before v0.1.1, users attempting similar tasks would face:

No Custom Tool Integration:

- Cannot easily add domain-specific capabilities (GitHub API, database queries, etc.)

- Potentially high friction for extending agent capabilities

Limited Self-Evolution:

- No mechanism for agents to programmatically access issue tracker

- Web search provides unstructured data vs. API-structured data

- Cannot reliably analyze engagement metrics (comments, reactions, labels)

Example Failure: Asking agents to “analyze GitHub issues” would result in:

- Web search attempting to scrape GitHub web pages (unreliable)

- No access to issue metadata (labels, reactions, comments)

- Incomplete or inaccurate issue categorization

Success Criteria

- Custom Tool System Works: Users can register Python functions as tools via YAML config

- Tool Discovery: Agents automatically discover and use custom tools

- Multi-Source Analysis: Agents combine custom tools + web search + filesystem access

- Structured Data Access: Custom tool provides structured GitHub issue data

- Self-Evolution: Agents demonstrate market-driven feature prioritization

- Multi-Agent Collaboration: Both agents use custom tools and reach consensus

(desired-features)=

🎯 Desired Features

With these goals defined, v0.1.1 implements:

- Custom Tool Registration: Simple YAML configuration for adding Python functions as tools

- Automatic Schema Generation: MassGen generates JSON schemas from function signatures

- ExecutionResult API: Standardized return type for tool outputs (text, images, audio)

- Streaming Support: Tools can stream progress updates for long-running operations

- Cross-Backend Support: Custom tools work with all backends (Gemini, OpenAI, Claude, etc.)

- GitHub Issue Analyzer Tool: Reference implementation demonstrating market analysis capability
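To make the idea of automatic schema generation concrete, here is a minimal sketch of deriving a function-calling style JSON schema from a Python signature using only the standard library. It is an illustration of the general technique, not MassGen's implementation: `build_tool_schema`, the type mapping, and the `fetch_issues_demo` signature are all hypothetical names introduced for this example.

```python
import inspect
from typing import get_type_hints

# Illustrative subset of a Python-annotation to JSON-Schema type mapping.
_JSON_TYPES = {str: "string", int: "integer", float: "number",
               bool: "boolean", list: "array", dict: "object"}


def build_tool_schema(func):
    """Build a function-calling style schema from a function's signature."""
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        # Unannotated or unmapped parameters fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    doc = inspect.getdoc(func) or ""
    return {
        "name": func.__name__,
        "description": doc.splitlines()[0] if doc else "",
        "parameters": {"type": "object", "properties": properties,
                       "required": required},
    }


def fetch_issues_demo(repo: str, state: str = "open", limit: int = 50) -> dict:
    """Fetch issues for a repository (hypothetical signature, illustration only)."""
    return {}


schema = build_tool_schema(fetch_issues_demo)
```

In this sketch the first docstring line becomes the tool description, which is why a well-written docstring matters for tools that agents must discover on their own.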

(testing-phase)=

🚀 TESTING PHASE

(implementation-details)=

📦 Implementation Details

Version

MassGen v0.1.1

(new-features)=

✨ New Features

Custom Tools System:

- `massgen/tool/` module with `ToolManager` for tool registration and execution

- Automatic JSON schema generation from Python type hints and docstrings

- `ExecutionResult` API for standardized tool outputs

- Support for streaming results with progress updates

- Multimodal outputs (text, images, audio)

- Tool categories for organization

- Built-in tools: `run_python_script`, `run_shell_script`, `read_file_content`, `save_file_content`, `append_file_content`
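Streaming with progress updates can be pictured as a generator that yields intermediate chunks before a final result. The sketch below assumes nothing about MassGen's actual `ExecutionResult` class: `ToolChunk` and `count_words_streaming` are hypothetical stand-ins that only show the pattern.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class ToolChunk:
    """Hypothetical result chunk; not MassGen's ExecutionResult."""
    text: str
    is_final: bool = False


def count_words_streaming(documents: list[str]) -> Iterator[ToolChunk]:
    """Yield one progress chunk per document, then a final chunk with the total."""
    total = 0
    for index, doc in enumerate(documents, start=1):
        total += len(doc.split())
        yield ToolChunk(text=f"Processed {index}/{len(documents)} documents")
    yield ToolChunk(text=f"Total words: {total}", is_final=True)


chunks = list(count_words_streaming(["alpha beta", "gamma"]))
final = chunks[-1]
```

A caller can display each non-final chunk as it arrives and treat the chunk flagged `is_final` as the tool's answer.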

GitHub Issue Analyzer Tool (massgen/tool/_self_evolution/_github_issue_analyzer.py):

- Fetches issues from GitHub API with PR filtering

- Analyzes by category, labels, and engagement (comments + reactions)

- Provides insights: top engaged issues, most requested features, category breakdown

- Streaming progress updates during execution

- Demonstrates Self-Evolution through autonomous market analysis
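The analysis steps listed above (PR filtering, engagement ranking, label breakdown) can be sketched on in-memory data as follows. This is not the code in `_github_issue_analyzer.py`; `analyze_issues` and the sample records are made up for illustration. The only outside fact relied on is the shape of GitHub REST API issue objects, where pull requests carry a `pull_request` key and issues expose `comments`, `reactions.total_count`, and `labels`.

```python
from collections import Counter


def analyze_issues(items, top_n=3):
    """Drop pull requests, then rank issues by comments + reactions."""
    # The issues endpoint also returns pull requests; they are recognizable
    # by the presence of a "pull_request" key.
    issues = [item for item in items if "pull_request" not in item]

    def engagement(issue):
        reactions = issue.get("reactions", {}).get("total_count", 0)
        return issue.get("comments", 0) + reactions

    ranked = sorted(issues, key=engagement, reverse=True)
    label_counts = Counter(
        label["name"] for issue in issues for label in issue.get("labels", [])
    )
    return {
        "total_issues": len(issues),
        "top_engaged": [(i["number"], engagement(i)) for i in ranked[:top_n]],
        "label_breakdown": dict(label_counts),
    }


# Made-up sample data in the shape of GitHub issue objects.
sample = [
    {"number": 1, "comments": 4, "reactions": {"total_count": 3},
     "labels": [{"name": "enhancement"}]},
    {"number": 2, "comments": 1, "reactions": {"total_count": 0},
     "labels": [{"name": "bug"}]},
    {"number": 3, "comments": 9, "reactions": {"total_count": 5},
     "labels": [], "pull_request": {"url": "..."}},
    {"number": 4, "comments": 2, "reactions": {"total_count": 6},
     "labels": [{"name": "enhancement"}, {"name": "documentation"}]},
]
report = analyze_issues(sample)
```

Item 3 has the highest raw engagement but is excluded because it is a pull request, which is the kind of distinction that web scraping tends to miss.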

Configuration Integration:

- Simple YAML `custom_tools` configuration

- Specify tool path, function name, category, and description

- Works across all backends
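A configuration that names a file path and a function implies that the function is imported dynamically at startup. One common way to do this in Python is `importlib.util.spec_from_file_location`. The helper below, `load_tool_function`, is a hypothetical sketch of that approach and is not taken from MassGen's source.

```python
import importlib.util
import tempfile
from pathlib import Path


def load_tool_function(path, function_name):
    """Import the module at `path` and return the named callable."""
    spec = importlib.util.spec_from_file_location(Path(path).stem, path)
    if spec is None or spec.loader is None:
        raise ImportError(f"Cannot load a module from {path}")
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    func = getattr(module, function_name, None)
    if not callable(func):
        raise AttributeError(f"{function_name!r} is not a callable in {path}")
    return func


# Usage: write a throwaway tool module to a temp directory and load it.
with tempfile.TemporaryDirectory() as tmp:
    tool_path = Path(tmp) / "_echo_tool.py"
    tool_path.write_text("def echo(text: str) -> str:\n    return text.upper()\n")
    echo = load_tool_function(str(tool_path), "echo")
    result = echo("hello")
```

Failing early with a clear error when the path or function name is wrong is a design choice worth keeping in any real loader, since configuration typos are otherwise hard to diagnose.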

New Config

massgen/configs/tools/custom_tools/github_issue_market_analysis.yaml:

    agents:
      - id: "agent_a"
        backend:
          type: "gemini"
          model: "gemini-2.5-pro"
          cwd: "workspace1"
          custom_tools:
            - name: ["fetch_github_issues"]
              category: "market_analysis"
              path: "massgen/tool/_self_evolution/_github_issue_analyzer.py"
              function: ["fetch_github_issues"]
          enable_web_search: true

      - id: "agent_b"
        backend:
          type: "openai"
          model: "gpt-5-mini"
          cwd: "workspace2"
          text:
            verbosity: "medium"
          reasoning:
            effort: "low"
            summary: "auto"
          custom_tools:
            - name: ["fetch_github_issues"]
              category: "market_analysis"
              path: "massgen/tool/_self_evolution/_github_issue_analyzer.py"
              function: ["fetch_github_issues"]
          enable_web_search: true

    orchestrator:
      snapshot_storage: "snapshots"
      agent_temporary_workspace: "temp_workspaces"
      context_paths:
        - path: "massgen"
          permission: "read"
      voting_sensitivity: "balanced"
      answer_novelty_requirement: "lenient"
      max_new_answers_per_agent: 5

Command

    uv run massgen \
      --config massgen/configs/tools/custom_tools/github_issue_market_analysis.yaml \
      "Analyze the massgen dir and MassGen GitHub issues to understand what features users are requesting. Also research current trends in multi-agent AI systems and LLM orchestration. Based on the existing code, the open issues and market trends, write a prioritized recommendation report for the next release."

(agents)=

🤖 Agents

- Agent A (agent_a): Gemini 2.5 Pro with custom GitHub issue analyzer tool, web search, and read access to the `massgen/` codebase

- Agent B (agent_b): GPT-5-mini with low reasoning effort, same custom tool, web search, and codebase access

Both agents have:

- Custom Tool: `fetch_github_issues` for analyzing GitHub repository issues

- Web Search: Enabled for market research

- Filesystem Access: Read-only access to the `massgen/` directory for codebase analysis

- Workspace: Dedicated working directories (`workspace1`, `workspace2`)

(demo)=

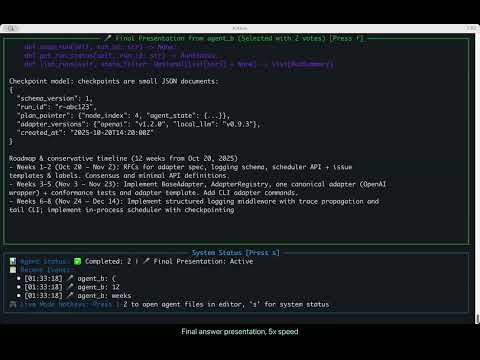

🎥 Demo

Watch the full demonstration of MassGen v0.1.1 custom tools in action:

Click to watch: MassGen v0.1.1: Custom Tools with GitHub Issue Market Analysis

Execution Log: (local, not in repo) .massgen/massgen_logs/log_20251020_012622/

Runtime: ~11 minutes (01:26:23 - 01:37:XX)

(evaluation-and-analysis)=

📊 EVALUATION & ANALYSIS

Results

The v0.1.1 custom tools feature enabled a successful multi-source analysis that would have been more difficult in prior versions.

The Collaborative Process

Agent Behavior:

Both agents followed a comprehensive analysis workflow:

- Codebase Analysis: Used filesystem MCP tools to explore the `massgen/` directory structure

- GitHub Issue Analysis: Used the custom `fetch_github_issues` tool to retrieve and categorize open issues

- Market Research: Used web search to research current trends in multi-agent AI and LLM orchestration

- Synthesis: Combined all three sources into prioritized recommendations

Coordination Pattern (from coordination_table.txt):

- Total Coordination Events: 35 events across 5 coordination rounds

- Agent A (Gemini): Provided 1 answer after analyzing all sources

- Agent B (GPT-5-mini): Provided 4 answers across multiple refinement cycles

- Restarts: 9 total restarts (5 by Agent A, 4 by Agent B) demonstrating iterative refinement

The Voting Pattern

Voting Summary:

- Total Votes Cast: 2 votes

- Agent A voted for: `agent2.2` and `agent2.4` (both Agent B answers)

- Agent B voted for: `agent2.4` (self-vote after refinement)

Voting Rationale (from logs):

Agent A’s vote for agent2.2:

“The agent provided a comprehensive and well-structured…”

Agent A’s vote for agent2.4 (winning answer):

“Agent 2’s answer is exceptionally comprehensive…”

Agent B’s vote for agent2.4:

“Comprehensive analysis of code, issues, and market trends…”

Winner: Agent B (agent_b) selected as winner with answer agent2.4
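As a rough illustration of how the listed votes lead to this outcome, the sketch below tallies them with a simple plurality count. MassGen's actual consensus rules (for example, how earlier votes are treated once new answers arrive) are not described in this case study, so `tally` is a hypothetical simplification.

```python
from collections import Counter

# Votes as listed in the voting summary: (voter, answer label).
votes = [
    ("agent_a", "agent2.2"),
    ("agent_a", "agent2.4"),
    ("agent_b", "agent2.4"),
]


def tally(votes):
    """Count votes per answer and return (winning answer, counts)."""
    counts = Counter(answer for _, answer in votes)
    winner, _ = counts.most_common(1)[0]
    return winner, dict(counts)


winner, counts = tally(votes)
```

Under this simplified count, `agent2.4` is the only answer endorsed by both agents.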

Answer Evolution Over Time

One of the most interesting aspects of this execution was watching how Agent B’s answers evolved and improved through multiple iterations. This demonstrates the value of multi-agent collaboration and iterative refinement.

Agent B’s Answer Progression (4 iterations):

agent2.1 (First Answer) - 01:29:42

Structure:

- Starts with “Scope and method”

- Lists key findings from three sources

- Provides prioritized recommendations

Characteristics:

- Good breadth, covers all major themes

- 3 priorities: “Plugin/Adapter Registry (MVP)”, “Basic Observability”, “Orchestration primitives”

- Generic deliverables (“Implement a well-documented plugin interface”)

- No executive summary

- Length: ~100 lines

Weakness: Lacks concrete implementation details and actionable next steps.

agent2.2 (Second Answer) - 01:30:14

Improvements:

- ✅ Added “Executive summary (one-paragraph)” at the top

- ✅ Better structure with “What I reviewed and assumptions”

- ✅ More refined priority labels: “MVP → near-term → stretch”

- ✅ More specific examples (“OpenAI-compatible wrapper”, “FAISS or Weaviate-lite”)

- ✅ Added CLI command examples (`massgen adapters list / install / info`)

Characteristics:

- Better organization and structure

- More concrete deliverables

- Still somewhat generic on implementation

Vote: Agent A voted for this answer, noting it was “comprehensive and well-structured”

agent2.3 (Third Answer) - 01:30:53

Improvements:

- ✅ Restructured to start with “What I reviewed (concrete artifacts)” - more specific

- ✅ Executive summary becomes “Short executive summary” - more concise

- ✅ Added “Blocking gaps” section - clearer problem statement

- ✅ Better separation of strengths vs. gaps

- ✅ More actionable deliverables

Characteristics:

- Clearer structure

- More focused recommendations

- Better gap analysis

agent2.4 (Fourth Answer - WINNER) - 01:31:32

Major Improvements:

- ✅ Executive summary moved to very top - optimal information hierarchy

- ✅ Added concrete API sketches with Python code examples (`BaseAdapter`, `LLMAdapter`, `VectorStoreAdapter`)

- ✅ Added minimal logging event schema (JSON example)

- ✅ Added roadmap & conservative timeline (3 months, week-by-week breakdown)

- ✅ Added effort estimates (engineer-weeks)

- ✅ Added success metrics (short-term, mid-term, long-term)

- ✅ Added “Immediate next steps I can implement for you” with specific choices

- ✅ Added recommendation for what to pick first with clear rationale

- ✅ Added minimal acceptance criteria for each deliverable

Characteristics:

- Length: ~140 lines (~1.5x longer than first answer)

- Completeness: Implementation-ready with code samples

- Actionability: Specific next steps and effort estimates

- Structure: Professional product roadmap format

Votes: Both Agent A and Agent B voted for this answer

Key Insights from Evolution:

- Information Hierarchy Matters: Moving executive summary to the top (agent2.4) made the answer immediately actionable

- Concrete > Abstract: Adding code examples and specific timelines dramatically improved usefulness

- Actionability Wins: The winning answer provided clear “what to do next” guidance

- Refinement Works: Each iteration built on the previous, adding missing elements

Why agent2.4 Won:

From Agent A’s final vote:

“Agent 2’s answer is exceptionally comprehensive…”

The winning answer wasn’t just longer - it was implementation-ready. It provided:

- Concrete API designs (copy-paste ready)

- Realistic timeline (3 months, week-by-week)

- Effort estimates (for resource planning)

- Success metrics (for measuring impact)

- Clear next steps (for immediate action)

This evolution demonstrates how multi-agent collaboration with voting drives toward not just correct answers, but maximally useful answers.

The Final Answer

Winner: Agent B (GPT-5-mini)

Answer Quality:

The winning answer demonstrated exceptional synthesis of multiple data sources:

Executive Summary (from final answer):

“Goal: Make MassGen the easiest, safest, and fastest way to build multi-agent LLM orchestrations for experimentation and light production use.”

“Highest-impact next-release focus (MVP): (1) stabilize a minimal Adapter/Plugin contract + registry and ship 2–3 official adapters, (2) add structured observability with run/step trace IDs, and (3) ship a lightweight in-process scheduler/task-queue with pause/resume/checkpoint semantics.”

Data Sources Utilized (from answer):

- ✅ Codebase Analysis: “The massgen code tree (core concepts: agent, planner, executor), adapters, CLI surface, examples, and tests”

- ✅ GitHub Issues: “A snapshot of open GitHub issues and community requests (categorized: adapters, observability, orchestration, docs, examples)”

- ✅ Market Trends: “Current market trends in multi-agent/LLM orchestration: growth of adapters/plugin ecosystems, demand for observability/traceability, lightweight orchestration…”

Prioritized Recommendations:

The answer provided a detailed roadmap with:

- Top User Request Themes: Adapter/Plugin system (high), Observability (high), Orchestration primitives (high)

- Codebase Strengths and Gaps: Identified modular core as strength, lack of adapter contract as gap

- Priority 1 (MVP): Adapter API, Structured Observability, Lightweight Scheduler

- Priority 2 (Near-term): Example apps, Reproducibility, Cost-aware routing

- Priority 3 (Strategic): Replay UI, Security/governance, Autoscaling

Concrete Deliverables:

The answer included implementation-ready artifacts:

- Python API sketches for `BaseAdapter`, `LLMAdapter`, `VectorStoreAdapter`

- `AdapterRegistry` usage examples

- JSON logging event schema

- 3-month timeline with effort estimates

- Acceptance criteria for each deliverable

Custom Tool Usage

Tool Registration (from logs):

01:26:23 | INFO | Registered custom tool: fetch_github_issues from massgen/tool/_self_evolution/_github_issue_analyzer.py (category: market_analysis, desc: 'Fetch and analyze GitHub issues for market-driven ...')

Tool Discovery (from logs):

01:26:24 | INFO | Stream chunk [content]: 🔧 Custom Tool: Custom tools initiated (1 tools available): custom_tool__fetch_github_issues

01:26:30 | INFO | 🔍 [DEBUG] Available custom tools: ['custom_tool__fetch_github_issues']
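When auditing a run, log lines like these can be checked programmatically. The sketch below parses the registration line with a regular expression written against the format shown above; the pattern is an assumption based on this single example and may not cover every log variant.

```python
import re

# Pattern written against the registration line format shown in the logs.
REGISTERED = re.compile(
    r"^(?P<time>\d{2}:\d{2}:\d{2}) \| INFO \| Registered custom tool: "
    r"(?P<name>\w+) from (?P<path>\S+) \(category: (?P<category>\w+),"
)

line = (
    "01:26:23 | INFO | Registered custom tool: fetch_github_issues from "
    "massgen/tool/_self_evolution/_github_issue_analyzer.py "
    "(category: market_analysis, desc: 'Fetch and analyze GitHub issues "
    "for market-driven ...')"
)

match = REGISTERED.match(line)
info = match.groupdict() if match else {}
# The discovery lines show the tool exposed with a "custom_tool__" prefix.
exposed_name = f"custom_tool__{info['name']}" if info else None
```

Comparing `exposed_name` against the names in the discovery lines is a quick way to confirm that a registered tool was actually made available to the agents.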

Evidence of Use:

The final answer explicitly references GitHub issue analysis:

- “A snapshot of open GitHub issues and community requests (categorized: adapters, observability, orchestration, docs, examples)”

- Categorization by theme matches custom tool output format

- Engagement-based prioritization aligns with tool’s analysis capabilities

Comparison: Without the custom tool, agents would have attempted web search scraping, which would have:

- Failed to access issue metadata (labels, reactions, comments)

- Provided unstructured, incomplete data

- Lacked reliable categorization and engagement analysis

Self-Evolution Demonstrated

Self-Evolution through Market Analysis ✅

This case study demonstrates how MassGen can autonomously drive its own product roadmap through:

- User Feedback Analysis: Custom tool fetches and categorizes GitHub issues to understand user needs

- Market Intelligence: Web search provides competitive landscape and trend analysis

- Codebase Understanding: Filesystem access enables gap analysis between current state and user needs

- Data-Driven Prioritization: Synthesis of all sources produces actionable, prioritized recommendations

- Implementation-Ready Output: Provides concrete API designs, timelines, and acceptance criteria

Self-Evolution Pipeline:

    GitHub Issues (User Needs)
      + Market Trends (Competitive Landscape)
      + Codebase Analysis (Current Capabilities)
      → Prioritized Feature Roadmap
      → Implementation Artifacts

(conclusion)=

🎯 Conclusion

Why Custom Tools Enable Self-Evolution

The v0.1.1 custom tools feature is transformative for self-evolution because it:

- Lowers Integration Barrier: Users can add domain-specific capabilities with a small amount of Python code

- Enables Data Access: Custom tools provide structured API access (vs. unstructured web scraping)

- Maintains Type Safety: Automatic schema generation from type hints keeps each tool's schema consistent with its function signature

- Works Everywhere: Cross-backend compatibility means tools work with any model

- Composes with Existing Tools: Custom tools + web search + filesystem = powerful synthesis

Broader Implications

For MassGen Development:

- This case study indicates MassGen can now autonomously analyze user feedback

- Aspects of market-driven development can become automated, not manual

- Product roadmap can be continuously refined based on real-world data

For MassGen Users:

- Extend agents with proprietary APIs (databases, internal tools, etc.)

- Build domain-specific workflows without modifying MassGen core

- Combine multiple data sources for comprehensive analysis

Comparison to Baseline

| Capability | Pre-v0.1.1 | v0.1.1 Custom Tools |
|---|---|---|
| GitHub Issue Analysis | ❌ Web scraping only | ✅ Structured API access |
| Multi-Source Synthesis | ⚠️ Limited (web + code) | ✅ Comprehensive (API + web + code) |
| Extension Mechanism | ❌ Requires backend impl | ✅ Simple Python function |
| Type Safety | ❌ Manual validation | ✅ Automatic from hints |
| Cross-Backend | ❌ Backend-specific | ✅ Works everywhere |
| Self-Evolution | ⚠️ Basic (web research only) | ✅ Advanced (API + analysis) |

Success Metrics

✅ Custom Tool System Works: Tool registered and discovered correctly

✅ Tool Discovery: Agents found and used custom_tool__fetch_github_issues

✅ Multi-Source Analysis: Combined GitHub API + web search + filesystem

✅ Structured Data Access: Tool provided categorized, engagement-ranked issues

✅ Self-Evolution: Produced actionable, data-driven product roadmap

✅ Multi-Agent Collaboration: Both agents used tools; consensus reached via voting

Next Steps for Self-Evolution

This case study demonstrates market-driven self-evolution. The custom tools system enables progression to more advanced capabilities, which will be explored in future versions:

- Autonomous PR Submission: Creating GitHub PRs with agent-proposed features

- Autonomous Code Review: Running tests, linters, and code analysis on agent PRs

- Full Development Loop: Complete autonomous cycle (identify need → implement → test → submit → review)

(status-tracker)=

📌 Status Tracker

- Planning phase completed

- Features implemented

- Testing completed

- Demo recorded (execution log available)

- Results analyzed

- Case study documented